Métiers Médias

What does virtual production have in common with the…

There’s a family resemblance between these two technologies, but the full answer is nuanced.

Virtual production – a family of techniques that use computer-generated backgrounds to replace real-world scenery – has a lot in common with metaverse technology. If it’s hard to understand precisely how they’re related, it’s not because virtual production is hard to comprehend, but because the metaverse is.

Asking « what’s the relationship of virtual production to the metaverse? » is like asking « what’s the relationship of a hamster to the universe? » The answer would be the same whether we’re talking about hamsters, gerbils, elephants or pianos. The universe contains everything, so it includes these objects (animate and inanimate) as a matter of logic.

In its own way, the metaverse also contains everything. It does this in the sense that there will only ever be one metaverse: if there were two or more metaverses, they wouldn’t be metaverses. There have already been a few examples of companies excitedly claiming to have built a metaverse. But they haven’t. That would be like saying « Dave’s built an internet ». It doesn’t even make sense.

But while the universe is an « existence platform » – actually, it’s the existence platform – the metaverse is a virtual, extended and augmented reality platform. Other technology fields can contribute to it or at least use similar or related techniques to different ends.

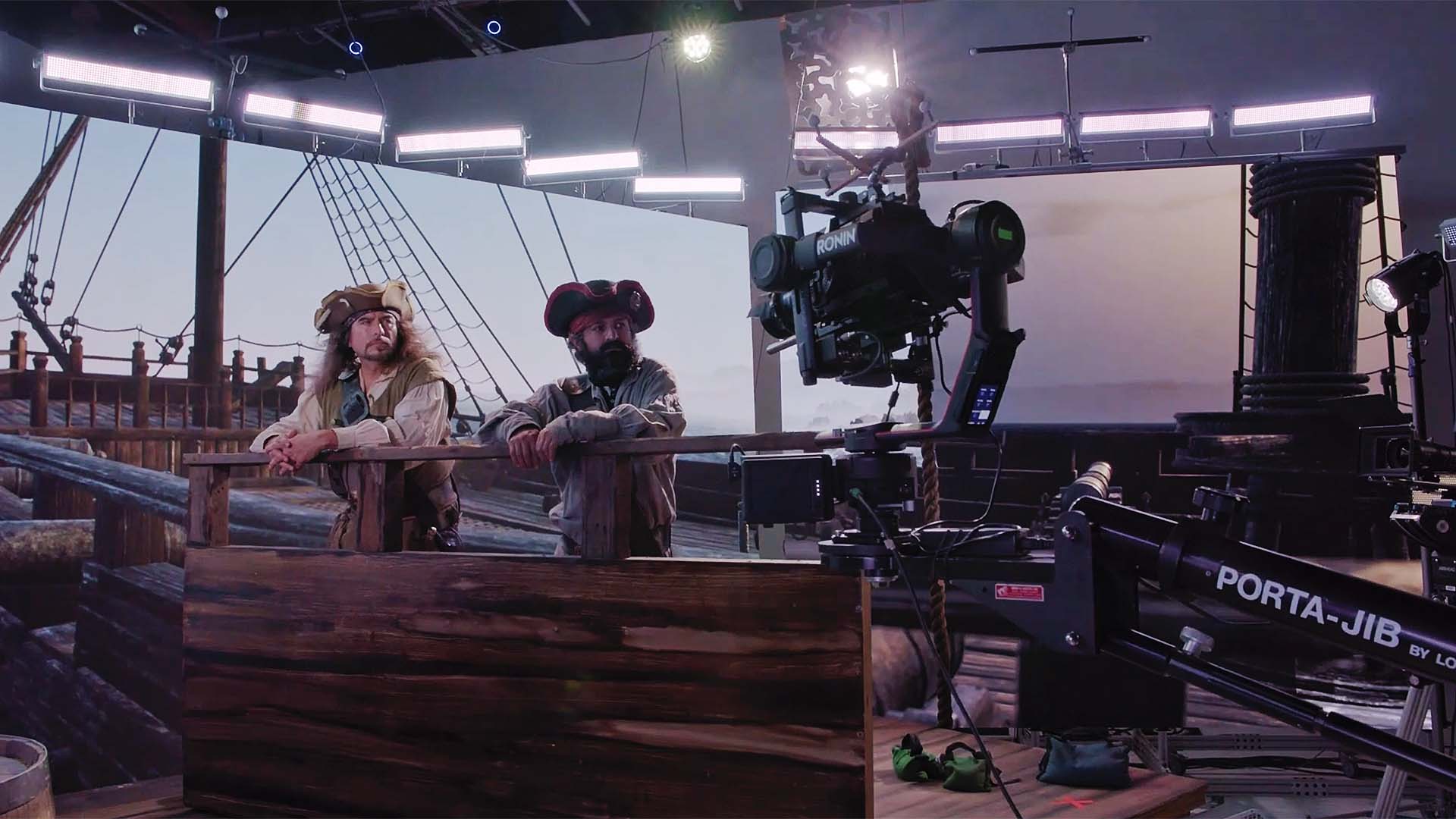

Our Flag Means Death, a virtual production.

What the Metaverse has in common with virtual production

Metaverse technology does have an awful lot in common with virtual production. Both generate scenes in real time, and those images are keyed to the movement of either a camera or an individual viewer. Up to a point, the technology is very similar, largely because what the two have in common is games engines – or, more accurately, what games engines will become. (Nvidia’s Omniverse shows what can happen when metaverse-type thinking is applied to real-world problems.)

Computer-generated graphics have been around for decades. Early computer games were pretty clunky at the consumer level, although 8-bit graphics have become a bit of a trendy cultural meme. At least this reminds us how far the technology has come. Computer-generated films have always looked better than games because the computers rendering the images don’t have to do it in real-time. Subject only to the 3D algorithms available at the time, you could have images of almost any quality if you were prepared to wait for them – even hours or days per frame.

But the constraint of real-time rendering has, until recently, limited the quality of graphics in virtual production. In parallel, it has taken until quite recently for LED screens to offer enough detail (determined by their « pixel pitch », the distance between adjacent pixels) to be convincing on a virtual production set. But games engines, aided by astonishingly powerful GPUs, have passed the threshold of believability for cinema. It’s still hard to portray CGI humans convincingly, but recent developments like Metahumans have started to get very close.
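To put the real-time constraint in perspective, here is a rough back-of-envelope sketch comparing the time a real-time engine has to draw one frame with the time an offline renderer could spend on a whole film. The figures are illustrative assumptions rather than numbers from any particular production: 24 frames per second, a 90-minute film, and one hour of offline rendering per frame on a single machine.

```python
# Illustrative assumptions (not from the article): 24 fps, a 90-minute film,
# and one hour of offline render time per frame on a single machine.
FPS = 24
RUNTIME_MINUTES = 90
OFFLINE_HOURS_PER_FRAME = 1.0


def realtime_budget_ms(fps: int) -> float:
    """Time available to render one frame in real time, in milliseconds."""
    return 1000.0 / fps


def total_frames(fps: int, runtime_minutes: int) -> int:
    """Number of frames in a film of the given length."""
    return fps * runtime_minutes * 60


def offline_render_years(frames: int, hours_per_frame: float) -> float:
    """Single-machine offline render time, in years of continuous running."""
    return frames * hours_per_frame / (24 * 365)


frames = total_frames(FPS, RUNTIME_MINUTES)
years = offline_render_years(frames, OFFLINE_HOURS_PER_FRAME)
print(f"Real-time budget per frame: {realtime_budget_ms(FPS):.1f} ms")
print(f"Frames in the film: {frames}")
print(f"Offline render time on one machine: {years:.1f} years")
```

Under these assumptions, a real-time engine has roughly 42 milliseconds per frame, while the offline approach would keep a single machine busy for well over a decade. That is why offline work is spread across render farms, and why real-time engines needed much faster GPUs before they could approach the same quality.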

It’s tempting to say that real-time photorealistic rendering is the key to a successful metaverse and, since the technologies are so closely related, to successful virtual production as well. But there’s a lot more to it than that. A virtual set – and don’t forget that most virtual production stages have live action as well; otherwise you wouldn’t need a set – involves a lot of precision engineering. That’s how you get effective camera tracking and convincing lighting. The lighting can come either from clever cinema lights that pick up colours from the CGI backgrounds, or from supplementary video screens whose sole job is to light the set consistently with the overall mixed CGI and live-action scene.

Where they differ

But where virtual production and the metaverse differ hugely is in their scope and ambition. The metaverse wants to be what the internet will become, with interconnected and interactive spaces, representing fantasy or simulated reality. The potential is that the metaverse will become a digital twin of our real world, where we can move the dial to crossfade into worlds made by our own imagination, someone else’s, or probably both. It will be both the room where we watch television – or whatever television becomes – and the content itself.

Virtual production is likely to become both a supplier of content for the metaverse and an app running in it. It’s worth remembering that the metaverse is not something you see only while wearing a head-mounted display. It will be all around us, immersive or not. Many of these metaverse manifestations will be better characterised as Extended Reality or Augmented Reality. The metaverse will run on our watches, phones, and car dashboards. It will provide centimetre-accurate data for autonomous cars. And for virtual production, it will become an optimised visual experience for film and TV production. The metaverse and virtual production are evolving rapidly in similar directions, and each will feed into the other. And each will be better for it.
